I just caught this sweet indie on Netflix -- my wife picked it, and neither of us knew what to expect. "Take Me Home" is not the only independent rom-com we've watched recently, but it stands out as a reminder of what movies (independent or otherwise) can accomplish.

A professor of mine from Brandeis, William Flesch, studies the "Darwinian" evolution of stories. Very roughly, he suggests that the stories that survive longest are the ones that somehow beg to be re-told. From there, the analysis gets complicated, as he digs into what goes into such a story, and why it works.

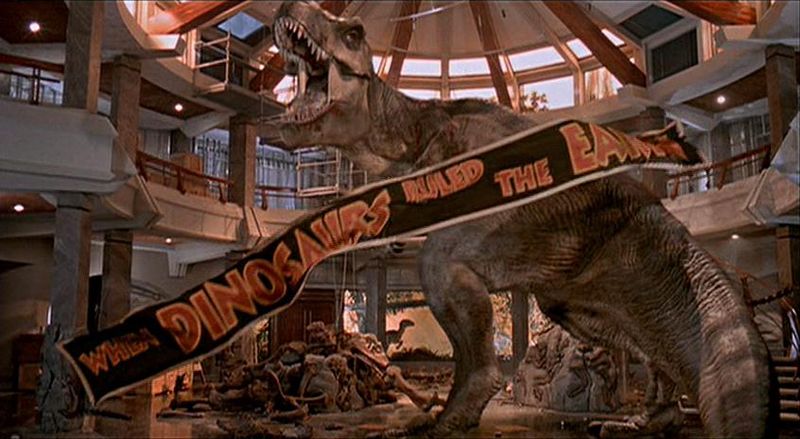

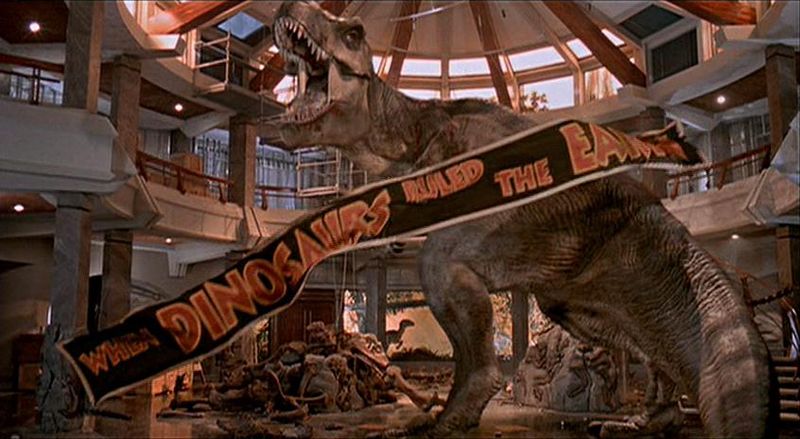

Since first hearing about this theory, I've been taking note of which stories have that effect on me. When I was first bitten by the film bug, around age ten, "Jurassic Park" overwhelmed me in this way. I wanted the story told to me over and over (I saw the film twice in theaters, and dozens of times on VHS at home), and I wanted it told in more than one way (I read and re-read Crichton's book until it was tattered, and listened to the soundtrack until the tape wore thin). I told my friends about it, and since I couldn't tell the story in quite the same way, I recommended they see the film and read the book, and thereby receive it themselves.

Now that I'm expecting my first child (due in three days, in fact!) I'm recalling other stories, other narratives that I feel an urge and an eagerness to share. Books and movies that were important to me as a child become a part of what excites me about parenting: I may soon get to share those books and movies with my own kid.

But since I discovered my own interest in filmmaking, in telling stories rather than just receiving them, I've found myself in an unusual position of power. I don't have to merely pass the stories along anymore. I can, to an extent, participate actively in their re-telling.

I had that sensation strongly when I saw Barry Levinson's "Sphere" back in '98. I had loved the book and recommended it to friends (a good story, demanding to be re-told), and had high hopes for the movie version. I was very disappointed, and decided (without irony, in the way a high-schooler dreams of conquering the world) that I'd re-make it someday, and that I'd simply have to do a better job. It wasn't that I felt I could get rich or famous in the process. The story simply hadn't been re-told well enough.

Sometimes, my response is subtler, as is the case with "Take Me Home". I'm writing about the film partly because I think it merits being seen (it's a story worth re-telling), but partly because it feels like the kind of story I want to tell. I don't feel a need to re-make the film (it's good enough as it is, after all), but there's something in the underlying structure of the story, something more basic, that speaks to me and wants to find new expression through my work. Perhaps this is where my professor's analysis might come in handy, dissecting the narrative for its evolutionarily selected genes?

But ultimately, I think that's a big part of my journey as an artist and a storyteller, to find the story I need to tell, and to do what I can to tell it.

-AzS

This blog is not about film reviews. Everyone writes film reviews. In these posts, I will not rate a movie with stars, or thumbs, or tomatoes. I will avoid telling you what you should or shouldn't see. This is a blog for thoughts and discussions about movies -- both current and old. This is a blog for film lovers everywhere to unite in conversation, discussion, and reflection on the art, science, culture and entertainment of moving pictures.

Wednesday, December 19, 2012

Sunday, December 16, 2012

Musing Pictures: The Hobbit: An Unexpected Journey

This is the future of cinema, whether you like it or not.

"The Hobbit: An Unexpected Journey" is the first major film ever shot and released in a format with a frame rate significantly higher than cinema's traditional 24 frames per second. Effects wizard Douglas Trumbull experimented with high frame rate technology in the '70s, creating the proprietary Showscan format, but was never able to utilize it in a feature film.

When a selection of critics and tech geeks got a chance to preview a scene from "The Hobbit" in this new, 48 frames per second format, the response was mixed at best. Many complained that it looked too much like daytime TV, or like a soap opera, and not enough like the cinematic experience it was supposed to be. I have to say, I agree, but only to a point.

There are several factors that taint the high frame rate (HFR) experience in this case.

First, most of us who are seeing "The Hobbit" in theaters grew up with television. I'm not talking about the new, flat-screen, high definition digital stuff. I'm talking, rather, about what used to come over the air to our cathode ray tubes, the big boxes in our living rooms. For much of the history of television in the US, TV was broadcast using a standard called "NTSC", which delivered our news and shows at a frame rate of 29.97fps (30fps, in short-hand). This was typically an interlaced image -- each frame was divided in two, and the two halves were displayed one after the other, instead of all at once. The frames weren't divided down the middle, but by alternating lines of resolution (so, the first half of the frame would be lines 1, 3, 5, 7, etc., and the second half would be lines 2, 4, 6, 8, etc.). So, although the resolution of a TV image was relatively low compared to what we'd see in theaters, the frame rate was higher (by nearly six frames per second), and the actual rate of flickering was much higher (and therefore much less visible) at nearly 60 half-frames, or "fields", per second. That, to us, subtly defined the television image.
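
For anyone who likes to see the numbers, here's a small Python sketch of the arithmetic and the odd/even line split described above. It's only an illustration: the function name `split_into_fields` is my own invention, not part of any broadcast standard, and real TV equipment obviously doesn't work on Python lists. The one outside fact I'm leaning on is that NTSC's "29.97" is a rounding of exactly 30000/1001 frames per second.

```python
from fractions import Fraction

# NTSC color video runs at exactly 30000/1001 frames per second,
# which is the figure usually rounded to "29.97".
NTSC_FRAME_RATE = Fraction(30000, 1001)
CINEMA_FRAME_RATE = Fraction(24)

# Each interlaced frame is sent as two fields (half-frames),
# so the field rate is simply double the frame rate.
NTSC_FIELD_RATE = 2 * NTSC_FRAME_RATE


def split_into_fields(frame_lines):
    """Split a frame (a list of scan lines, numbered from 1) into two fields.

    Hypothetical helper for illustration: the first field holds lines
    1, 3, 5, ... and the second holds lines 2, 4, 6, ...
    """
    first_field = frame_lines[0::2]   # list indices 0, 2, 4... -> lines 1, 3, 5...
    second_field = frame_lines[1::2]  # list indices 1, 3, 5... -> lines 2, 4, 6...
    return first_field, second_field


if __name__ == "__main__":
    print(f"NTSC frame rate: {float(NTSC_FRAME_RATE):.2f} fps")
    print(f"NTSC field rate: {float(NTSC_FIELD_RATE):.2f} fields per second")
    gap = NTSC_FRAME_RATE - CINEMA_FRAME_RATE
    print(f"Faster than 24 fps by: {float(gap):.2f} fps")
    print(split_into_fields(list(range(1, 9))))
```

Run as a script, it reports a frame rate of 29.97, a field rate of 59.94, a gap of 5.97 frames per second over cinema's 24, and splits lines 1 through 8 into ([1, 3, 5, 7], [2, 4, 6, 8]). Using `Fraction` keeps the 1000/1001 ratio exact until the final rounding for display.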

When digital technologists began to introduce 24fps video in the late '90s, I remember it as a heady time for independent filmmakers. For the first time, we could create content that had the "look" of film without losing the accessibility and cheapness of digital. At the time, David Tames of www.kino-eye.com broke it down for me as follows: We've become programmed by our own experiences. After seeing the news day in and day out in 30fps, we've come to expect fact-based, informational material to come to us at that frame rate. But since we're used to the cinematic experience at 24fps, we expect images that flicker that way to be fictional, to be narrative, and therefore fundamentally different from the 30fps "factual" images.

That has remained fairly true: fiction is shot at 24 frames per second, whereas non-fiction is shot at 30. Now that newer home TVs are capable of showing a 24fps image, the line has started blurring, but this hasn't been the case for long enough for us to un-learn our visual expectations.

So, when we see "The Hobbit" in a higher frame rate, of course it's going to look like TV. The television frame rate is the closest thing we have to compare it to.

(I wonder if this is the case in Europe, where the TV frame rate is 25 frames per second, extremely close to cinema's traditional 24. Do they think "The Hobbit" looks like TV? If you've seen it, and you're European, let me know!)

The second major factor in the 'small screen' feeling many reported in "The Hobbit" is, unfortunately, somewhat the fault of the filmmaker, Peter Jackson. The first act of the film is shot and paced like a 1990s British sitcom, and for that reason, serves as a poor introduction to the high frame rate format. It's where the story begins, so I can't fault Mr. Jackson for starting the film there, but the extended sequence in which Bilbo is visited by dwarves has little of the cinematic flair of other sequences in the film, so it doesn't counteract the sense of "TV" very strongly.

I think high frame rate cinema is here to stay. The images are very crisp, and movement is very sharp. In the slow, steady journey towards greater and greater realism, HFR is an important step.

That said, I think it requires a much stronger cinematic filmmaker than Jackson, whose choice of shots and angles is often dissociated from (and occasionally undermines) the mood of the scene. One of the challenges of HFR is that so much within the scene is vividly clear. Jackson provides us with quite a few deep focus shots -- shots where much of what is in frame is in sharp focus. Without a very strong, steady storytelling hand to guide us, we get lost among the details.

Although I find James Cameron's stories frustratingly simplistic, he tells those simple stories very well. His plans to release the next two Avatar films in HFR may provide us with a very different visual-narrative approach with which to judge the format.

For more on high frame rates, here's a very informative website with examples: http://www.red.com/learn/red-101/high-frame-rate-video

-AzS

Wednesday, December 05, 2012

Musing Pictures: Life of Pi

Life of Pi looks wonderful in 3D. Like Avatar, it presents a hyper-real world that is at once authentic and magical. It doesn't push as far as Avatar pushed, not in terms of the visuals, nor the emotional punch, nor (significantly) the action, but it's still a wonderful cinematic experience.

One of the challenges that the film struggles to overcome is the dissonance between beauty and emotional connection. Beauty is deeply emotional, for sure, but when it surrounds a story, it doesn't necessarily inspire the story's emotions.

An example, pointed out by my wife as we walked to our car from the theater, is the scene in which Pi's freighter, which carries his entire family and most of the menagerie from their zoo, sinks into the Pacific. The scene resolves with Pi, under water, watching the lights of the ship fade into the blackness.

The image is haunting, beautiful... but ultimately, it inspires awe, rather than sadness.

I've seen very few films that have successfully utilized profoundly beautiful images in conjunction with pivotal emotional moments. Often, we see beautiful landscapes (in anything from The Searchers to Lord of the Rings), but these set the scene. We see the beautiful landscape, then we move in on the characters and their moment within it.

Even in Independence Day, when the aliens blow up major cities, the effect is one of awe, rather than sadness or grief. For that film, it works, since we're expected to keep our moods light for what turns out to be a very fun, exciting adventure.

Spielberg is the only filmmaker who consistently utilizes beauty and the awe it inspires in conjunction with an emotional payoff. I'm thinking specifically of the astounding climax to Close Encounters of the Third Kind, which is very similar in some ways to the climax of E.T. the Extra-Terrestrial. Elsewhere, awe IS the appropriate reaction (the final T-Rex shot in Jurassic Park comes to mind, when our heroes have already escaped, and we are given a chance to admire the monster without fear).

But isn't beauty something of a signature for Ang Lee? The first time I really took note of the director was with his beautiful film, Crouching Tiger, Hidden Dragon. That film is book-ended by two major fight sequences, both incredibly beautiful. One of them, across rooftops in a dark town, demonstrates Lee's strength. The beauty of the sequence serves to underline its magic, and becomes part of the awe of the moment, and of the story. Also, it is beautiful in the way dance is beautiful, so it lends itself to a certain narrative clarity.

The second, atop tall bamboo trees, is graceful, dance-like, and ultimately slows the story down. Beauty, here, becomes a hindrance.

That's not to say the scene isn't legendary. It's one of the most fascinating fight scenes in cinema, but in the context of its story, the attention to beauty overwhelms attention to pace.

In neither case are these scenes particularly emotional. That, I think, remains the most difficult effect to merge with beauty in film.

-AzS

Monday, November 12, 2012

Trailer Talk: Jurassic Park 3D

While I don't want to go into whether or not it's a "good idea" to re-release Jurassic Park in 3D, I think there's something to be learned from seeing that trailer in a movie theater.

Last night, at an IMAX screening of "Skyfall", I got to see some very familiar images from a movie I must have seen nearly a hundred times. The trailer for the 3D re-release of "Jurassic Park" included some of the film's iconic images, including that of the T-Rex roaring between the two stalled tour cars (at around 1:23). Of course, the experience was very sentimental for me -- those images came from a film that inspired me to pursue a career in cinema. Before I studied any other movie, I studied Jurassic Park. But the T-Rex image gave me pause.

There it was, a beautiful, clean projection on an enormous IMAX screen. And it looked different -- not different from what I remembered, but unlike any of the other previews. In fact, it was unlike most of the big, effects-heavy adventure films I've seen over the last few years. There was something huge and magnificent about the image, about the way it was shot, the way it was framed, and the way it was finished. On that screen, the shot was life-sized, and almost had a tangible depth to it. This was not a 3D screening, but "Jurassic Park" wasn't made for 3D, and doesn't need it.

I've been thinking a lot about why that shot (and, in fact, most of the trailer) struck such a chord with me, and I think I've narrowed it down to two things:

1) Increasingly, the small-screen aesthetic informs big-screen movies. Lately, Hollywood has been churning out big special-effects films to try to lure movie lovers back to theaters. Unfortunately, the very filmmaking process is no longer a big-screen process. Movies are shot digitally, reviewed on small screens, edited on small screens, and make much of their money on small screens (TV and digital). The effects can be grand, but a grand theatricality is missing from most newer films. "Jurassic Park" has that grand theatricality, the kind of showmanship that DEMANDS the big screen, and that fills that screen from edge to edge. There were elements of that showmanship in "Skyfall", but only in two or three scenes (fight/action scenes, shot beautifully and with surprising artfulness by Roger Deakins).

It's hard to pinpoint exactly what this showmanship is, or how it emerges. In the case of the T-Rex shot, it's a combination of camera angle, blocking, lighting, and probably a half-dozen other elements that make for not just a moving image, but a portrait of the event. It's magnificent.

2) Digital special effects have yet to master the tangibility of practical effects. Although the T-Rex in the shot is digital, there's much more reality in that shot (and in the film) than virtual-reality. Most of what we see was there to imprint on celluloid. I think digital effects can achieve this, but only if that's a priority for the filmmaker. For the most part, it seems to me that the filmmakers of current special effects films are more interested in the freedom to achieve the impossible than in the effort of making the impossible seem real and present in the film.

There's a part of me that's a little disappointed that this 20th anniversary release of Jurassic Park is a 3D release, and not simply a 2D re-release of the original film. It could teach us so much about what we should be doing with the special effects miracles at our disposal.

-AzS

Musing Pictures: Lincoln

I was struck, as I drove away from the theater after seeing "Lincoln", by how few "point of view" shots were used. In fact, it has taken me hours to find the one moment in the film where the POV shot becomes central to the visual narrative scheme. Although there are a couple of other scenes that may include POV shots, it's just as likely that they're simply close angles -- there may only be one point of view moment in the entire film!

Steven Spielberg is not one to shy away from POV shots. In fact, some of his most famous moments (such as the shark attack at a crowded beach in "Jaws" or the rearview mirror shots in "Duel", "The Sugarland Express", and "Jurassic Park") feature POV shots. As I've written elsewhere, Spielberg uses these in combination with what I've termed "point of thought", camera placement and movement that evokes a character's mood or feeling, rather than literal point of view.

Spielberg's treatment of "Lincoln" relies heavily (and unsurprisingly) on this "point of thought" approach, but it's not Lincoln the character whose thoughts we're invited to join. The most iconic and memorable images of Lincoln (played brilliantly by Daniel Day-Lewis) are all from behind him, from over his shoulder. He would be in profile, but he's looking away, looking forward, to the future perhaps. We see the back of Lincoln's head, and sometimes a sliver of his profile. This isn't necessarily how others in the room see him, but it's how they feel about him. To those around him, Lincoln is a dreamer, always looking forward, away, to the future.

Since Lincoln is presented to us in the way that those in the room feel about him, we have a sense of general mood or general attitudes, but not necessarily a strong allegiance to any one character's point of view. It's part of the film's attempt to present the story without too much mythology. It contemplates Lincoln the man, rather than Lincoln the legend.

But Lincoln the man becomes Lincoln the legend, and the transition happens as he leaves the White House, headed for the inevitable at Ford's Theatre. As he walks away, his black servant watches. And it's the black servant whose point of view we see: Abraham Lincoln, lanky, iconic, walking alone down a long hallway. In other words, we can never understand Lincoln fully without glimpsing him, even briefly, through a black man's eyes.

-AzS

Musing Pictures: Skyfall (2012)

I didn't think I'd be writing about plot holes when writing about "Skyfall", but some comments on plot holes by Sean Quinn (whom I know only as @nachofiesta on Twitter) got me thinking.

Here's the background: Scott Feinberg (@scottfeinberg, whom I know from Brandeis) got into a bit of an exchange with Mr. Quinn on Twitter after noticing this:

@nachofiesta: Another movie that's complete shit is Marathon Man. God damn that's a terrible movie.

When Feinberg pressed Quinn for an explanation for this surprising assertion (since Marathon Man is sacred ground to many cinephiles), the response was very specific:

@nachofiesta: So many gigantic plot holes.

Well. A plot hole does not a lousy movie make.

I think back to films with plot "issues" that are considered great films. "Batman Begins" has a thirty-minute climax that makes no physical sense (wouldn't a giant microwave boil people, too?). "The Big Sleep" famously doesn't seem to have much of a coherent plot at all, and it's downright canonical.

Now, I think plot holes do have their impact, but only if the films are weak, or lack narrative conviction.

Plot holes are a kind of continuity problem. With visual continuity, we expect to see visual elements play themselves out in a natural, uninterrupted, contiguous way. A lit cigarette should get shorter and shorter with every shot. If it's a little shorter in one shot, then long again in the next, the discontinuity can be distracting, and can remind us that we're watching a film -- a construct (and if we can see the seams, it's not a very good one, is it?)

But we often miss these continuity "mistakes", especially when they're in great films. My favorite example is in Jurassic Park, during the fantastic T-Rex attack sequence. It starts with a T-Rex walking through a fence, and it ends with a car being pushed off a cliff. Trouble is, the T-Rex entered the scene from exactly the same spot where the car plummets at the end. It's complete spatial nonsense, but many people don't see it, even once it's been pointed out to them. The scene is extremely engrossing, made with conviction, so we don't spend time looking for the seams. We're absorbed, enthralled, lapping it up.

Unlike lapses in spatial continuity, plot holes can be much easier to forgive. Usually, plot holes are moments where certain decisions or opinions or actions don't seem to make sense in the context of a character or story. They don't violate hard-and-fast rules; rather, they're critical omissions.

Here's where this connects to "Skyfall". Not far into the film, James Bond is shot at by a bad guy. The bullet breaks up, and he's hit by fragments. A while later, with MI6 in jeopardy, Bond gives them some of these bullet fragments to analyze. The results of the analysis are very new to MI6, and allow them to quickly narrow down their search for the bad guy. But of course, in the big scene where Bond is shot at, the same bad guy fires off hundreds more rounds. MI6 couldn't trace one of those hundreds of bullets?

Here's how we forgive plot holes: We get the facts of the story that are presented to us, but we know there's more to the story than what we're getting (at least, that's the way movies work these days -- we get a selection of shots that show us a scene, then another scene, then another, with sometimes vast gaps of time and space between them. We know there's more to the stories.) When we find a gap, we fill it in. "Plot holes" are just a little more difficult to fill in. In this case, I'm sure I could come up with reasons why MI6 didn't trace the bullet fragments. Maybe they were busy with other stuff? Who knows?

Who cares?

Ultimately, THAT's what does it. If you come across a plot hole and you care enough that it distracts you from the immersive experience of the story, it'll rip you right out of the film. But if you'd rather the story continue, you let it continue and ignore the discontinuity.

It's like when you're dreaming, and it's morning, and you're kind of waking up, but the dream is so good that you keep sleeping. Sure, you're kind of awake, so the dream isn't really a dream anymore, but if you like the dream, or if you care about where it's going, you'll do whatever you can to stay in that dream world just a little longer.

So, when it comes to Sean Quinn's experience of "Marathon Man", I think something very sad happened. Whereas most people who saw that film found the experience engrossing enough to lose themselves in it despite the plot holes, Quinn couldn't get past the realization that the story doesn't quite come together. It's sad because ultimately, we're all looking for that transporting experience. Sometimes, if you watch films too closely, too analytically, they can lose their magic.

And what about "Skyfall"? I think I was lucky. I caught the silly moment in the plot, and I considered letting it bother me, but I really wanted to enjoy this film, and so much of it had already thrilled me, that I let it go. On the drive home from the theater, I mulled the possibility of writing about the cinematography, or the unusually "artsy" action set-pieces, or any of a number of other topics. The "plot hole" was far from my mind minutes after the scene ended, and wouldn't have re-entered my thoughts had Sean Quinn not gone on about plot holes.

-AzS

Friday, November 09, 2012

Musing Pictures: A Hijacking (2012)

I caught this wonderful Danish film at the tail end of the 2012 AFI Film Fest. It is what it claims to be: a film about the hijacking of a Danish ship, and about the subsequent negotiations between the pirates and the company's CEO, Peter Ludvigsen (played stoically by Søren Malling).

I particularly loved the work of the sound department, Morten Green and Oskar Skriver, who introduce auditory elements that are at once very real, and very new to cinema.

There are quite a few scenes in which Peter speaks on the phone with the man claiming to be the pirates' "negotiator", a shrewd manipulator named Omar (who, as yet, is not credited on IMDb, which lists a cast of only six, although there were at least a dozen speaking roles in the film). These conversations take place on a speakerphone, so the rest of the response team in Denmark can listen in, and presumably, so that we can listen in as well. They are tense, of course, and they look pretty much standard, as far as tense hostage-negotiation phone calls go. But they sound very different.

This is a long-distance call, probably via satellite, to a ship in the Indian Ocean, and we hear that vast distance. The calls are full of pops, whistles, and static. Most notably, every time Peter speaks, he hears his own echo a second later (something we may know as an occasional glitch on our cell phones, or on Skype). The absolutely amazing part, though, is that we can still understand what Peter is saying. The choice of sounds, and the way they're mixed together, keep the voices clear despite the noise. This, to me, is a major accomplishment for the sound team, and something I've never heard in a movie before. Usually, either the voices are clear and there isn't much noise, or the noise is apparent and it's hard to make out the words being spoken.

It will be nice to see what the director, Tobias Lindholm, comes up with next... I hope he keeps the same sound team!

-AzS

ADDED 6/25/2013: I wrote an additional musing about "A Hijacking" around the time of its US theatrical release, published at Musing Pictures' new home, MaxIt Magazine: http://maxitmagazine.com/columns/musing-pictures/524-a-hijacking

Thursday, November 08, 2012

Trailer Talk: "World War Z"

The recently released trailer for "World War Z" uses a musical motif similar to the one in the "Prometheus" trailer, but not to the same effect.

First, the "Prometheus" trailer. Listen particularly to the music in the final 20 seconds:

Next, "World War Z". Listen at 1:50:

No, it's not the same sound, but they're definitely imitating it.

When the "Prometheus" trailer hit theaters, the web buzzed with anticipation, and much of the talk had to do with the primal, scream-like sound in the trailer's music. People bought the soundtrack before seeing the film, hoping to get that haunting, frightening score, but as it turned out, it was just a music track the trailer-editing company dropped into the trailer; it did not come from the film at all.

I think the "World War Z" trailer is clearly imitating the effect used in the "Prometheus" trailer, but whereas the "Prometheus" effect sounds like a weird, alien scream, the musical effect in the "World War Z" trailer just sounds like a slightly upset whale. I don't think it works here -- at least, not nearly as powerfully or viscerally as in the "Prometheus" trailer.

That's not to say that "World War Z" doesn't look exciting. But if you're going to "borrow" a creative impulse from an earlier source (and let's face it, much of art requires this kind of "borrowing"), make sure it works in the context of what you're doing!

Frankly, with the ant-swarm-like imagery, I think something more in line with the snake-pit music from "Raiders of the Lost Ark" might have been creepier...

What do you think?

-AzS

Wednesday, November 07, 2012

Musing Pictures: The Rules of the Game (1939)

I was fortunate to catch Jean Renoir's 1939 classic, "The Rules of the Game", at this year's AFI Film Festival. They showed a very watchable, newly restored print of the French film. I say "very watchable" because many earlier prints were in very bad shape (including those from which VHS and early DVD copies were made). The film itself has a unique and unusual history: it was thought to be lost when the originals were destroyed during Allied bombing in World War II. After the war, the film was reassembled from partial copies and fragments, often with Renoir's explicit feedback. It added a layer of magic to see a film that was really a re-construction of itself. There's no way to know how close the re-construction is to the original. Would we think Frankenstein's monster was a human if we had never seen a human?

I wonder this, too, because I can't imagine an artist, given the opportunity to tweak his own work, would re-construct it (or agree to its re-construction) without tinkering here and there. I know that if I were to re-construct any of my own films, they would all certainly take on slightly different forms. Some scenes might be cut short, others might be re-arranged. And it's not always the best thing for the movie.

We see this, in a different way, with re-releases of certain films. When Lucas restored the "Star Wars" trilogy in the '90s, he re-released the films with significant changes. What we got to see in theaters weren't merely restorations, but updates: films that contained scenes and effects that never came close to appearing in the originals. As a result, some of the power of "Star Wars" drained out of the films. Han Solo's famous shot in the Cantina is now somehow fired in self-defense, the creatures, which were once so tangible (because they were puppets), are now ethereal (because they're CGI animation), etc. When Spielberg restored and re-released "E.T.", we were presented with some cute scenes that weren't part of the original film. Also, the police officers who originally toted guns were now holding walkie-talkies. The sense of jeopardy just isn't the same.

How can we know that Renoir's re-constructed film is true to his original creation? Frankly, we can't. The original is lost, and was actually seen by very few to begin with. In this particular case, it's the re-construction itself that is the masterpiece.

-AzS

Thursday, October 18, 2012

Musing Pictures: H+ Episode 15

No, it's not a movie. It's an episode of Bryan Singer's web series on YouTube. But I was struck by something here; let's see if you see it, too.

Robert Altman is considered a pioneer of overlapping dialog, scenes in which several characters hold parallel conversations at the same time. Altman made sure to put microphones on all of his characters, so when it came time to mix the audio, he could bring up a word here or a phrase there, and guide our listening. We'd hear a cacophony of voices, but with Altman's help, we'd be able to tease out the bits of each conversation that were important.

Singer, in this episode of "H+", seems to be playing a similar game. The conceit, of course, is that characters have computers implanted in their brains that allow them to communicate with each other, "digitally", in addition to their face-to-face interactions, as if they have Skype in their heads.

In this scene, I count at least three conversations, possibly four or five, two of which we are at least partially privy to. There's the verbal conversation about the patient and her baby, and that's the obvious (and standard) conversation. Then, there's the bearded guy on the right, who seems to be communicating via notes to someone else on the network. The other bearded guy, clearly distracted, seems to be communicating with someone, too, and the patient is either communicating or observing something through her own implant.

What struck me here is that, unlike Altman's scenes, where we hear multiple conversations but are guided toward what deserves our attention, here we have two modes of communication, verbal and written, and it appears that we need to pay attention to both. We're not given one over the other, in the way Altman might mix certain words or phrases so they're clearer than the babble in the room. It's confusing, but it's also well integrated into the narrative itself: the implants provide people with multiple, simultaneous layers of information, sometimes to the point of distraction. And that, of course, is a stand-in for our experience of the internet, a vast source of all the information in the world, available to us all at once. But how could we possibly process it all? Are we being overwhelmed by information?

What I don't know is whether the effect is intentional here. Or is it yet another side effect of the information age?

-AzS

Sunday, October 14, 2012

Musing Pictures: Split: A Deeper Divide

Twice today, I encountered the media blaming itself for divisiveness in American politics. First, on an NPR radio show (possibly "On the Media", but I'm not certain), the anchor wrapped up a presentation on misinformation in campaign advertisements with a hesitant conclusion: "I blame the media!" I heard this on the way to a screening of the documentary "Split: A Deeper Divide," produced by my friend, Jeff Beard.

"Split" explores the rancorous and divisive tone taken by American politics in recent years. It suggests numerous reasons for this polarization, including the media's need to play to increasingly specific and narrowly defined target audiences. Basically, with so many media options, it's more cost-effective for news providers to tailor their news to a narrowly defined audience than to try to provide a more broad-based message.

It's intriguing to me to encounter media that challenges The Media in this regard. I heard it in the anchor's voice on the NPR radio show: an awareness of the irony, and perhaps of the mild hypocrisy, of stating "I blame the media" on the air. The statement is itself a blanket accusation, leveled flatly, without nuance, at an entire, enormous, diverse industry. Isn't that exactly the kind of new communication style the media thrives on these days, the kind that feeds audiences' hunger for tension, for a good fight?

One of the things I appreciate about "Split" is that it takes a very even, non-judgmental look at the factors that contribute to American political divisiveness. It blames the media without needing to resort to the blanket statement "I blame the media!" Of course, in an atmosphere informed by stand-offish argumentativeness, this gives the film a bit of a dated feel, as if it's a '70s schoolroom film-strip rather than a twenty-first-century documentary. But in a way, that's the best part about it. It's not cynical about its own message (even if it is The Media, and as such, part of the problem).

"Split" is making its way to various cities this election year. If you're looking to understand what's making this country suffocate on its own hot air, I'd encourage you to check the film out. The screening schedule is available on the film's website.

And for a snapshot, here's the trailer:

-AzS

Thursday, October 11, 2012

Musing Pictures: Looper

Like any really good sci-fi, "Looper" (directed by Rian Johnson, whose debut feature, "Brick", is the millennial generation's "El Mariachi") isn't really about science fiction. Instead, it takes a common, compelling narrative theme (in this case, the "what if?" scenario of second chances) and re-frames it literally, using the elements of sci-fi to accomplish what "regular" fiction can only do with metaphors.

Time travel represents a unique narrative opportunity (paradoxes aside) to push "what if?" scenarios to the extreme. What if you could go back in time to rectify a past wrong? To make bad things better? To intercede on your own behalf, or on behalf of others?

Less mature narratives (such as my own film, "Paradox in Purple", made in 11th grade) focus on the paradox itself, using it as a cautionary mechanism: don't mess with the past (as if we could!) lest your changes come back to haunt you! In real terms, it's a caution not to worry ourselves with the past, since the path that leads to our present is complex beyond our capacity to comprehend. The paradox-centric time travel narrative is there to pacify those of us who wish we had done things differently, or that others had done things differently on our behalf. We rest easier at night knowing that our present coordinates are the sum of far more complex elements than a few moments in time.

"Looper" is a mature time travel narrative. The paradox is not the obsessive focus of the story, and the painful lesson that the past is unchangeable is not a part of this film. "Looper" avoids the issue by keeping its story rooted in "the past". "The Terminator" does the same thing, posing the same fundamental question: If we know the future, and we know it's bad, what is the extent of our responsibility to change it? In other words, rather than asking "what if we could change the past?" the film asks "what if we could change the future?"

What I like about "Looper" is that it pushes this question to such an extreme that it becomes, yet again, something the ancient philosophers obsessed about: Is preemptive punishment justified? Even when you know the details and extent of the future crime? "Minority Report" asks this question directly, in a more judicial context. "Looper" brings it around and makes it personal: If you know something terrible will happen, how responsible are you to prevent it, given the chance?

In "Looper," Joseph Gordon-Levitt and Bruce Willis (who both play the same character, thirty years apart) have different takes on the answer to that question. But the film doesn't leave us feeling certain that either of them was entirely right or entirely wrong. The moral answer to the age-old question remains murky, and we've been left to continue pondering it for ourselves.

I think that's the powerful sensation I felt when I left the theater: the sense that a profound question about responsibility and fate had been asked, but not answered. It's not an unsatisfying sensation, because the story itself concludes clearly and cleanly, but it's unfamiliar, because most films these days take pains to reach a moral footing when their narratives conclude. Johnson's background in noir ("Brick" is firmly rooted in the '40s genre) may have something to do with the moral open-endedness, but "Looper" doesn't suggest a dark world without morality. It suggests a world much like our own, where morality exists and motivates us, but doesn't make itself clear when the difficult decisions need to be made.

-AzS

Time travel represents a unique narrative opportunity (paradoxes aside) to push "what if?" scenarios to the extreme. What if you could go back in time to rectify a past wrong? To make bad things better? To intercede on your own behalf, or on behalf of others?

Undeveloped narratives (such as my own film, "Paradox in Purple", made in 11th grade) focus on the paradox itself, using it as a cautionary mechanism: don't mess with the past (as if we could!) lest your changes come back to haunt you! In real terms, it's a caution not to worry ourselves with the past, since the path that leads to our present is complex beyond our capacity to comprehend. The paradox-centric time travel narrative is there to pacify those of us who wish we had done things differently, or that others had done things differently on our behalf. We rest easier at night knowing that our present coordinates are the sum of far more complex elements than a few moments in time.

"Looper" is a mature time travel narrative. The paradox is not the obsessive focus of the story, and the painful lesson that the past is unchangeable is not a part of this film. "Looper" avoids the issue by keeping its story rooted in "the past". "The Terminator" does the same thing, posing the same fundamental question: If we know the future, and we know it's bad, what is the extent of our responsibility to change it? In other words, rather than asking "what if we could change the past?" the film asks "what if we could change the future?"

What I like about "Looper" is that it pushes this question to such an extreme that it becomes, yet again, something the ancient philosophers obsessed about: Is preemptive punishment justified? Even when you know the details and extent of the future crime? "Minority Report" asks this question directly, in a more judicial context. "Looper" brings it around and makes it personal: If you know something terrible will happen, how responsible are you to prevent it, given the chance?

In "Looper," Joseph Gordon-Levitt and Bruce Willis (who both play the same character, thirty years apart) have different takes on the answer to that question. But the film doesn't leave us feeling certain that either of them was entirely right or entirely wrong. The moral answer to the age-old question remains murky, and we've been left to continue pondering it for ourselves.

I think that's the profound sensation I felt when I left the theater: the sense that a weighty question about responsibility and fate had been asked, but not answered. It's not an unsatisfying sensation, because the story itself concludes clearly and cleanly, but it's unfamiliar, because most films these days take pains to arrive at a moral footing when their narratives conclude. Johnson's background in noir ("Brick" is firmly rooted in the '40s genre) may have something to do with the moral open-endedness, but "Looper" doesn't suggest a dark world without morality. It suggests a world much like our own, where morality exists and motivates us, but doesn't make itself clear when the difficult decisions need to be made.

-AzS

Friday, September 21, 2012

Musing Pictures: The Master

In P.T. Anderson's "The Master", one thing that stood out to me was the way certain scenes were staged. On several occasions throughout the film, we are presented with a small crowd of ten or twenty people, and out of that crowd, one character stands out sharply. Often, these are point-of-view shots, and the character who stands out does so by looking at the camera: at us, at the character through whom we're observing the scene. It's an interesting and subtly unnerving effect, one I'd expect to see more often in horror films and thrillers than in dramas.

In general, the blocking and framing in "The Master" are worth noting. The aspect ratio is not especially wide (and why should it be? We're generally looking at people, rather than scenery), so groups of people have to clump together. Some scenes reminded me of the way John Ford would clump his characters, always a little too close to seem real, but always just far enough apart to avoid "staginess".

-AzS

Thursday, September 13, 2012

Musing Pictures: "Muslim Innocence"

This is not a proper "Musing Pictures" post, as I have not seen the film about which I write. I've read enough about it to know what it is. I feel the need to comment here in response to some of the public discourse about the "film" that recently sparked outrage and murder in the Arab world. The issues are enormous, and far too broad for a modest filmmaker to tackle. I don't approach politics in this blog, as a rule, and I don't intend to start now. This goes way beyond politics.

First, the background in brief: A "film" of mysterious origins (apparently financed by "100 Jews" and directed by an "Israeli American") is translated or subtitled into Arabic and shared with the Arab world. It depicts the Prophet Mohammed, which, in and of itself, offends many Muslims. It depicts the Prophet in coarse, unflattering ways. This really pisses some people off. They express their frustration in protest. Some of the protesters get very violent, killing people who have nothing to do with the film.

There are two reactions to this:

1) People say "Yuck! Islamic people are barbaric!"

2) People say "Yuck! The filmmakers are morons!"

Neither statement really approaches the depth or seriousness of what's going on.

Film is powerful. We learned this from the Nazis. Many of the world's more repressive governments work hard to suppress this kind of mass communication. TV and movies are heavily censored and controlled. Whoever made this controversial "Film" knew this and exploited it.

I get into murky territory here, because people have been killed over this stupid "Film". I can't address the tendency toward brutality in the Muslim world, except to say that it angers me. If "accepting Muslim culture" means accepting Muslim violence, I reject Muslim culture. Of course, I don't believe Muslim culture necessitates Muslim violence, but it certainly seems to embrace it in some parts of the Islamic world.

In the West, frustration is ideally expressed not by violence, but by communication. Op-eds, essays, art. Even strikes, marches and sit-ins are much more about communication than they are about physical violence. I believe firmly in the virtues of expression-by-communication, and in the freedoms of speech, assembly, and the press that protect it.

I am proud to be a citizen of a country that embraces these freedoms, even if they result in trash. And I intend to make full use of these freedoms to point out how vile a piece of trash "Muslim Innocence" is.

The catalysts for cultural change are almost always acts of communication. Jesus spoke to his followers and changed Judaism forever. Marx wrote his Manifesto, and world politics transformed. Violence can appear to be a catalyst for change, but more likely, it inspires a hardening of attitudes, an entrenchment of opinion.

I think it's important to address problems in Islam, just as it is important to address problems in America, or in Judaism, or in any culture. I think it's necessary to address these issues with communication, rather than violence. I think there should be more brave artists willing to step up and say "hey, Islam, here's what we see when we look at you!"

If there were more protest, more communication, more depictions of Mohammed, the cheap, pointless, hateful stuff would go unnoticed.

The reason I hate "Muslim Innocence" is that it's downright dishonest. It's dishonest in two ways.

-Its critiques of Islam are not critiques of Islam at all, but of an imaginary Islam, an Islam that was made up by people who didn't know how to express their frustrations with the real thing. It's an Islam made up by people who don't know enough about the real Islam to criticize it properly. Much like anti-Semitism, or any other brand of cultural bigotry, it relies on a hateful description that is, in and of itself, false.

-It hides behind other scapegoats. This is something I first encountered in Roger Ebert's essay: http://blogs.suntimes.com/ebert/2012/09/a_statement_and_a_film.html

The claim was made (by whom? I'm not sure) that the film was directed by an "Israeli American", and was financed (to the tune of $5M... I'm a filmmaker, I know what $5M looks like on screen...) by one hundred Jews. 100 Jews. It's a typological number, not a literal one. The filmmaker's name, Sam Bacile (an Israeli name?), becomes something else when shortened to Monsieur Bacile (or, "M. Bacile" -- read it out loud if you still don't get it). A Jewish (Israeli-American) imbecile? Directing a movie funded by 100 Jews (because Jews have money, right?) This seems like an obvious plot to use Jews as scapegoats. Here's the intended outcome: Jews look like money-mad bigots who spend their money on stupid projects (instead of jobs? the economy?) to piss off Muslims, who then kill Christians. Christians get their holy war, and if it goes wrong, they can blame the Jews for inciting it. Roger Ebert may turn out to be wrong about this. The film might turn out to have been made by an Israeli-American, and funded by one hundred Jews. If that turns out to be the case, you can be sure that hundreds of thousands of Jews will rise up in protest.

And that brings me back around. How many Muslims will rise up to protest the murders in Libya?

This "Film" deserves serious protest, but not violence. Certainly nothing even close to murder. Not even of the people involved, let alone people who had nothing to do with its production and distribution.

The violence in Libya deserves more protest, too, and if the full force of the message requires that the Prophet be depicted, by all means, depict the Prophet! But my plea to artists and communicators everywhere is to remember that the most effective communication is informed communication. Understand the problem you're addressing and THEN protest the hell out of it! And be up-front about it, too. Don't hide behind pseudonyms. Don't scapegoat the Jews (or anyone!) Cowardly protest is no protest at all.

And so, for the first time on this blog, I sign with more than my initials,

-Arnon Z. Shorr

Thursday, August 16, 2012

Musing Pictures: The Bourne Legacy

There's a certain confidence necessary for a truly effective action sequence. Action sequences need room to breathe, moments of quiet that allow the viewer to get oriented, to see the danger, and to register its potential.

I thought of this a lot while watching "The Bourne Legacy". The initial trilogy (of which I'd say this is a spin-off) included some of the best action sequences of the last half-century, so this one had a lot to live up to. Without dissecting action sequences shot-by-shot (an interesting, albeit lengthy exercise), here's where I think the originals got it right, and where the new one gets it wrong.

Take a look at the pacing and lensing of this now-famous scene from "The Bourne Identity":

It's remarkable how many of the shots in this sequence last for more than a second. Also, the action is very clear. Each shot conveys something about the progression of the sequence: where they're going, where they are in relation to the people chasing them, where the obstacles are, and what happens when they collide.

Also, we're set back from the action a bit: not too far, but just enough to see most of what we're looking at. We see most of the car, or most of a motorcycle.

There's a tendency to come in really close in a lot of "imitation" chase sequences, and to cut very quickly. The reasoning behind this is that a chase is frantic, so, in a way, that frenetic energy needs to be conveyed to the audience.

I think "The Bourne Identity" conveys this energy well (unsteady camera, lots of movement, etc.), but it never loses track of the fact that this is, after all, a part of a narrative, and that as narrative, it must remain clear!

If we can tell what's going on at all times (even if we don't know how the hero is going to get out of the situation), we're on the edge of our seats. Once we lose track of the action, and it becomes a jumble of quick, shaky close-ups, we lose our focus, and instead of anxiously watching the hero figure it out, we find ourselves forced to figure it out on our own.

In a nutshell, I think that's the main flaw in the "Bourne Legacy" action sequences. They're shot too close and cut too fast, aiming for a visceral, rather than narrative experience. See the movie, and let me know what you think.

-AzS
